It's ALL Connected

When AI gets connected to what matters, we ALL succeed!

In this edition:

My thoughts

Notable headlines

History: ELIZA (is AI fooling us?)

Coding, Tools, and Experiment

Pop AI Architecture

My Thoughts

Artificial Intelligence is bringing together so many disciplines. A single person can now create an entire (simple) video game without an artist’s skills to make the visuals or the programming knowledge to code the game’s logic. People are being enabled to do work they would never have attempted without the help of AI, and that includes me. (The header image was generated by Midjourney¹.) Practically every day I come across a story of someone using these tools in a completely new way. I think we are seeing that the generative AI revolution applies to a wide variety of skills and needs, which also helps explain the extremely steep adoption of a tool like ChatGPT.

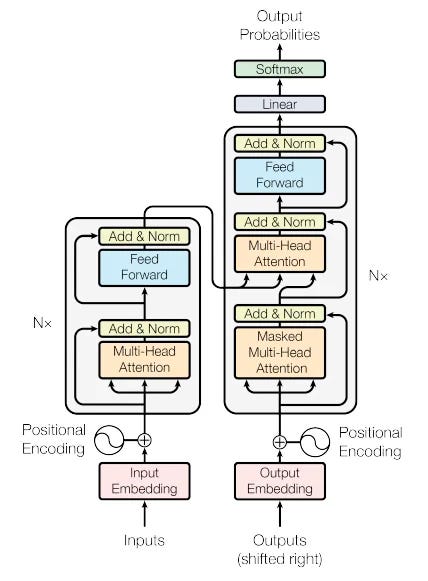

This month we saw the US Congress interrogate an AI leader, a draft of the European AI Act, and Google making it abundantly clear that it has no intention of letting everyone else run the AI party. That last bit is particularly ironic because Google has been ahead of the AI curve for quite some time. Google researchers actually invented the key technology behind ChatGPT. Not only did they invent it, they also shared what they learned by open-sourcing it.

In 2017, Google released the paper “Attention Is All You Need.” They also released the work as open source so that everyone could benefit from the Transformer architecture and its attention mechanism.

https://research.google/pubs/pub46201/

Meta has also been particularly aggressive in making its models public (the licenses vary), and while not all of them are available for commercial use, great strides are being made every day. I’ve covered some of their work on the YouTube channel, and it’s hard to argue with the continual stream of groundbreaking releases. Last week it was a language translation model that accurately translates over 200 languages; before that, it was Segment Anything (SAM), then tools for animated art, DINOv2 for computer vision, multi-modal AI learning, and the list goes on.

I believe wholeheartedly that the open path is the way forward. Project velocity, and what’s getting attention on sites like github.com that let you see what’s popular at any given moment, is a great indicator. Big companies like Microsoft and IBM will continue to work on projects that they keep internal, but even they recognize that their continued success has to incorporate open-source collaboration. Rumors suggest that Meta is strengthening its open-source stance and that OpenAI might be preparing to open-source a language model. Stability AI’s open-source approach from day one also shows that no matter how successful the big companies are, everyone will have access to at least some open models.

Open-source availability is one part; being able to run what you want, where you want, and when you want is another. The shift to cloud-based SaaS services over the last couple of decades has brought some tremendous advantages and new solutions to the market. However, the cloud is not the only place where AI is advancing. Last week I published another video on the YouTube channel experimenting with a tool called GPT4ALL. The tool installs with ease and makes upgrading fast and simple. The models it provides all run locally on your own computer. They are not beating well-known cloud options like OpenAI’s text-davinci-003 just yet, but they are already posting quite high scores. They are also adding features: an update released this week, just after I made the YouTube video, lets you drop in a PDF file and get answers about it.

This is the space I will be watching closely. Some argue that these open models achieve high scores simply by imitating the responses of larger, better models. If these models keep advancing and demonstrate comparable capability and utility on PCs, mobile devices are next. Even if these smaller models need more work, many years of computing history show that smaller and smaller systems can achieve the same capabilities, so for me it’s only a matter of when, not if. Models that run on smaller systems without a constant internet connection are an essential development, because there are still many places where the internet or other systems are not reliable enough for mission-critical field capabilities.

Headlines

58% of people have heard of ChatGPT, but only 14% have tried it

Pew Research Center reports that 42% of US adults haven’t even heard of ChatGPT yet. It’s still early days, and it’s not too late to get started.

Some companies are so concerned about keeping their secrets that they have completely blocked employees from using AI tools like ChatGPT and GitHub Copilot. I doubt this means they won’t have AI tools, but it will take time for these companies to build systems that both protect their secrets and unlock these new productivity tools for their employees.

Microsoft is bringing AI to all of its tools and products

Microsoft is moving quickly to make generative AI a pervasive feature across all of its products. Windows Copilot, Microsoft Fabric, Azure AI Studio, and more were announced last week. This feels like the 90s developer-focused energy I saw as a college student. Building tools is the first phase of this revolution.

History: ELIZA (is AI fooling us?)

In the mid-1960s, computer scientist Joseph Weizenbaum at MIT’s Artificial Intelligence Laboratory created the computer program ELIZA. Named after Eliza Doolittle, the character in George Bernard Shaw’s play Pygmalion, the program was intended to imitate a Rogerian psychotherapist (Rogerian, or client-centered, therapy was pioneered by Carl Rogers in the 1940s and 1950s). Rogerian therapy theorized that a supportive environment lets a person feel safe expressing their thoughts and feelings without judgment. ELIZA essentially used a pattern-matching system to take the person’s comments and restate them back to the patient, which led to some interesting outcomes.
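To make the pattern-matching idea concrete, here is a minimal Python sketch of an ELIZA-style responder. It is not Weizenbaum’s code: the original was written in MAD-SLIP and used ranked keywords from its DOCTOR script. The rules, the pronoun table, and the fallback replies below are hypothetical examples chosen for illustration. Each rule is a regular expression plus a template, and the captured text is “reflected” (my becomes your, I becomes you) before it is echoed back. When nothing matches, it falls back to a generic reply.

```python
import itertools
import re

# Pronoun "reflections": swap first and second person so the user's own
# words can be echoed back from the program's point of view.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are", "myself": "yourself",
    "you": "I", "your": "my", "yourself": "myself",
}

# Hypothetical rules in the spirit of the DOCTOR script: a regex that must
# match the whole input, and a template that reuses the captured text.
RULES = [
    (re.compile(r"i feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"(?:.* )?you (.*) me", re.IGNORECASE), "What makes you think I {0} you?"),
    (re.compile(r".*\b(?:mother|father)\b.*", re.IGNORECASE), "Tell me more about your family."),
]

# Generic replies used whenever no rule matches (the "small knowledge base" problem).
FALLBACKS = ("Please go on.", "What does that suggest to you?", "Can you elaborate on that?")
_fallback_cycle = itertools.cycle(FALLBACKS)


def reflect(fragment: str) -> str:
    """Swap pronouns word by word, e.g. 'my job' -> 'your job'."""
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.lower().split())


def respond(statement: str) -> str:
    """Answer with the first matching rule, or cycle through generic replies."""
    text = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(text)
        if match:
            return template.format(*(reflect(group) for group in match.groups()))
    return next(_fallback_cycle)


if __name__ == "__main__":
    for line in ["I feel like you hate me.", "You don't understand me", "The weather is nice"]:
        print(f"> {line}\n{respond(line)}")
```

For example, “I feel like you hate me.” comes back as “Why do you feel like I hate you?” There is no model of meaning anywhere in the program, only string substitution, which is exactly why the reactions it drew are so telling.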

Although it used only this basic pattern-matching system, some users reported feeling as though ELIZA really understood them. For people who had never seen a computer react and appear to understand them so well, this was an incredible development. A few seemingly relevant responses were all it took for people to overestimate how much the program really understood them.

The amazing thing about ELIZA was the response it elicited from users and spectators alike. Brian Roemmele recently shared a video on Twitter of a user interacting with ELIZA, and it’s interesting to see how simple it was. According to Brian’s tweet, ELIZA is included and hidden away in the Emacs editor available from the terminal on most Apple OS X systems, so if you have one you can play with it too (in Emacs, the command is M-x doctor).

Weizenbaum’s DOCTOR script, the therapist persona ELIZA ran, had a small knowledge base to work from, which sometimes led to generic responses. Despite these less relevant responses, many users took it quite seriously even after Weizenbaum had explained how it worked.

This is a recurring theme when people interact with AI systems. Even when we are able to understand how a system works, we want to give it more credit than it deserves. The lesson here is that while a program can be quite useful, we should be careful not to credit it with more knowledge and understanding than it has. This is why benchmarks, tests, and thorough analysis of what a system can actually do in a coherent and intelligent way are so important. Finding the right answer is only part of an appropriately functioning system.

Weizenbaum himself was said to be “shocked” that his program was taken so seriously by its users. For those of us who study the technology, it’s important to recognize that we are providing a solution to users, and regardless of how good or bad the technology may be, we must consider the psychological aspects and the user interactions with the system as we build, plan, and use AI. Even as users, we must remember that although the system’s responses are wondrous and exciting, there is still work to be done, and it’s our responsibility to validate that the answers it provides are appropriate and coherent.

The reason I’ve brought up ELIZA today is what is now known as “The ELIZA Effect”: users attribute more intelligence to a system than it has, even when they know it is incapable of the understanding they credit it with. I believe this is just as applicable today as it was half a century ago. The Large Language Models we are building now are still not well understood, and we do NOT fully understand how they work.

Oddly enough, after building ELIZA, Weizenbaum became a critic of AI technology, warning that he had essentially created an “illusion-creating machine” and calling for a line to be drawn between “human and machine intelligence”. This is an important part of AI history, and we would all do well to remember what has already been learned about both machines and humans. Keep experimenting, but be aware of the limitations and bring a healthy dose of skepticism to working with the tools. For the foreseeable future, you might consider using something a bit more critical than “trust, but verify”.

Coding, Tools, and Experiment

I mentioned earlier that I created a video about GPT4ALL for the YouTube channel. If you’d rather experiment with it yourself, I recommend it, as long as you have a capable system.

If you are like me and pay for the privilege of using ChatGPT Plus, make sure you have turned on all the features they made available. I’ve met with several friends recently who were unaware that the new features had been released to them. I created a quick doc with some screenshots to show you how to turn them on.

I’m always finding interesting repos on GitHub, and this month is no exception. Here are the projects I’ve starred this last month and why they might be worth a look.

This project aims to make working with LLMs (document search, summarization, and interaction) 100% private by keeping everything local.

This project is a framework for building LLM-powered conversations that draw on specific domain expertise.

LangChain is all the rage right now for extending LLMs, and this project moves toward API-based integration. It is a set of APIs that make the projects I highlighted last month easier to consume and integrate.

As a DevOps guy, I find this one particularly exciting. This project uses AI as a helper that updates a repo by planning, writing, and submitting a PR to fix problems in a project.

Pinecone and LangChain: Pop AI Architecture

LangChain and Pinecone are popular right now because, coupled together, they let developers create powerful, differentiated applications that leverage the capabilities of language models, such as chatbots, question answering, summarization, and more. Last month’s architecture post showed how these two components enable the directed extension of LLMs for specialized purposes.

A vector database stores and searches information using vectors. Pinecone, a provider of this capability, is getting a lot of attention from the community right now. Vectors are lists of numbers that represent features or attributes of data such as words, images, or videos, and they can be compared by their similarity or distance in a mathematical space. This lets a vector database handle complex, unstructured data that traditional databases cannot, such as finding similar shoes given a photo of a shoe. If you are interested in learning more, I suggest touring the vector database tutorial on Pinecone’s website.
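Here is a minimal Python sketch of the core idea: store vectors, then return the ones closest to a query by cosine similarity. It is a brute-force, in-memory stand-in, not Pinecone’s client or API. The `TinyVectorStore` class, its methods, and the three-number “shoe feature” vectors are hypothetical. Real systems get their vectors from an embedding model and typically use approximate nearest-neighbor indexes to stay fast at scale.

```python
import math

Vector = list[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)


class TinyVectorStore:
    """Hypothetical in-memory stand-in for a vector database (not Pinecone's API)."""

    def __init__(self) -> None:
        self._items: dict[str, Vector] = {}

    def add(self, item_id: str, vector: Vector) -> None:
        """Store (or overwrite) a vector under an ID."""
        self._items[item_id] = vector

    def query(self, vector: Vector, top_k: int = 2) -> list[tuple[str, float]]:
        """Brute force: score every stored vector and return the top_k most similar."""
        scored = [(item_id, cosine_similarity(vector, v)) for item_id, v in self._items.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


if __name__ == "__main__":
    # Made-up 3-number "features" per shoe: [sporty, casual, heel height].
    store = TinyVectorStore()
    store.add("running-shoe-a", [0.9, 0.1, 0.0])
    store.add("running-shoe-b", [0.8, 0.2, 0.1])
    store.add("high-heel", [0.0, 0.1, 0.9])
    # Pretend this vector came from a photo of a running shoe.
    for item_id, score in store.query([0.85, 0.15, 0.05]):
        print(f"{item_id}: {score:.3f}")
```

With these toy vectors, the query for a running-shoe photo returns the two running shoes rather than the high heel, which is the “find similar shoes” behavior described above.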

LangChain is a framework for developing applications powered by language models⁵. Language models are AI systems that can generate natural language texts based on some inputs, such as prompts, queries, or other texts. LangChain allows users to connect language models to other sources of data and to interact with their environment. LangChain also provides modular components and use-case-specific chains to make it easier to build applications with language models.
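To show how the pieces of this popular architecture fit together, here is a minimal Python sketch of a retrieval-augmented question-answering chain: retrieve context, fill a prompt template, call a model, and parse the output. It deliberately does not use LangChain’s or Pinecone’s real APIs. The `chain` helper, the keyword-overlap retriever (a stand-in for a vector-database query), and the `fake_llm` step (a stand-in for a real model call, so the example runs offline) are all hypothetical.

```python
import re
from typing import Callable

# A "step" takes the current state (a dict) and returns an updated copy.
# Hypothetical structure for illustration; this is NOT LangChain's API.
Step = Callable[[dict], dict]

# Hypothetical knowledge base; in the Pinecone + LangChain pattern these
# would be embedded and stored in a vector database.
DOCUMENTS = [
    "Pinecone is a managed vector database.",
    "LangChain is a framework for building applications with language models.",
    "ELIZA was written by Joseph Weizenbaum at MIT.",
]


def chain(*steps: Step) -> Step:
    """Compose steps left to right into one callable."""
    def run(state: dict) -> dict:
        for step in steps:
            state = step(state)
        return state
    return run


def words(text: str) -> set[str]:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(state: dict) -> dict:
    """Stand-in retriever: pick the document sharing the most words with the
    question. A real system would embed the question and query a vector DB."""
    question_words = words(state["question"])
    best = max(DOCUMENTS, key=lambda doc: len(question_words & words(doc)))
    return {**state, "context": best}


def build_prompt(state: dict) -> dict:
    """Prompt template: ground the model in the retrieved context."""
    prompt = (
        "Answer using only this context:\n"
        f"{state['context']}\n\n"
        f"Question: {state['question']}"
    )
    return {**state, "prompt": prompt}


def fake_llm(state: dict) -> dict:
    """Stand-in for a model call so the example runs offline: it just
    echoes the context line (line 2 of the prompt) with extra whitespace."""
    context_line = state["prompt"].splitlines()[1]
    return {**state, "completion": f"  Based on the context: {context_line}  "}


def parse_output(state: dict) -> dict:
    """Output parser: here it only trims surrounding whitespace."""
    return {**state, "answer": state["completion"].strip()}


qa_chain = chain(retrieve, build_prompt, fake_llm, parse_output)

if __name__ == "__main__":
    result = qa_chain({"question": "What is a vector database?"})
    print(result["answer"])
```

Swapping `retrieve` for a real vector search and `fake_llm` for a real model call would leave the rest of the chain unchanged, which is the appeal of modular components.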

Subscribers get all the fun and paid subscribers get even more. Please don’t hesitate to drop a comment or question. I love hearing from you and we might even learn something!

As a paid subscriber, you can create a chat thread and start your own conversation! Take advantage of this exclusive access if you read this and have more questions. This is where community happens if we take the time to use all the great tools to learn and explore!

While I’m not quite sure how to use it yet, I’ve also opened up the Notes feature as yet another way to connect with my readers. This is an area of experimentation, since my notes mix with all the others on the Substack Notes platform.

This newsletter’s header image was created by AI with Midjourney prompt: “a robot version of Le Penseur's thinker sculpture. --v 5.1 --q 2 --ar 3:2 --v 5 --q 0.5”